When Your Custom AI Becomes the Attack Vector – Why Building Secure-by-Design Matters Just as Much as Speed to Market

Enterprises across every sector are rushing to build custom AI capabilities: from internal chatbots and AI agents that streamline operations to customer-facing agents that transform service delivery. Yet whilst organisations focus on capturing AI’s transformative potential, many are inadvertently expanding their attack surface and introducing risks that didn’t exist just months ago.

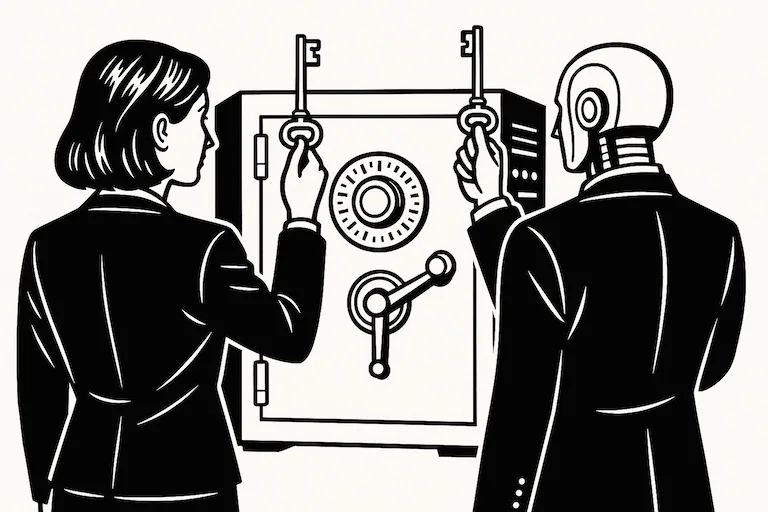

This shift represents a fundamental change in how we must approach cybersecurity. AI innovation is no longer just a technology consideration; it has become a competitive imperative that directly affects market position. Organisations that can innovate fastest whilst maintaining robust security will build lasting advantages over those that either move too slowly or compromise on security to deploy quickly.

Beyond Third-Party AI: The Custom Development Challenge

The ‘Shadow AI’ challenge, where employees use AI tools the organisation has not sanctioned, remains significant. This article, however, focuses on a different frontier: the security implications of developing and deploying your own large language model (LLM) applications and autonomous agents.

These custom AI systems, whether internal tools for employees or customer-facing applications, introduce entirely new categories of risk. Third-party AI services typically ship with established security and data loss prevention controls. Custom implementations do not, so organisations must build those controls into every layer of their AI stack themselves.
